You Can’t Stop an Ambitious Person!

-

Tam Zamanlı Girişimcilikte üçüncü yılım – Full-time Entrepreneur

Girişimcilik hayatın apayrı bir lezzeti. Çok şanslıyım ki ben bu lezzeti tadabilen nadir kişilerdenim. Evet önceden girişimci sayısı çoktu, ancak günümüzde üniversiteden mezun olup şirketlerde çalışıp girişimciliğe soyunan kişi sayısı o kadar az ki…

-

Gaslighting: Gerçekliğinizle Oynayan Sessiz Şiddet

Gaslighting’in ne olduğunu ilk kez duyduğumda, bu kavramın benim yaşadığım bazı şeylerle bu kadar örtüşebileceğini hiç düşünmemiştim. Ama öğrendikçe, “Bu bendim” dedim. Yıllar içinde, hiç farkında olmadan bu manipülasyonun tam ortasında kalmıştım. Peki nedir bu Gaslighting? Gaslighting, bir kişinin karşısındakini sistematik olarak gerçekliğinden şüphe ettirmesiyle tanımlanıyor. Bu bir çeşit psikolojik manipülasyon. Yani biri, bilinçli bir…

-

Mükemmel Hissetmek – Ana Odaklanmak

Hiçbir sebep, seni mükemmel hissetmekten alıkoyamaz. Sağlığın mı kötü? Düşünme. Bir arkadaşın canını mı sıktı? Bak keyfine. Kafanı başka konulara, seni güçlendirecek odak alanlarına çevir ki, hem sen güzelleş, hem de etrafın güzelleşsin. Düşünmek tabi ki iyi, ancak bunu fazlaca yapmak herkesin alışkanlığı olmuş durumda. Eckhart Tolle‘nin, “The Power of Now” –adlı kitabı modern spiritüel…

-

Success Without Personal Values Is Not Sustainable

Who we are as individuals is shaped by the experiences we gain from our parents, upbringing, and the role models we follow. Of course, environmental factors also play a role, but at the core, it is our parents who mold us. Why is this so important? Because as we grow up, we build a life—one…

-

Remote Team Collaboration: Best Practices I’ve learned at Pivony

Even though I’m not a fan of remote work settings, in today’s reality, especially for small startups, finding productive team members and bringing them together in one office is like walking to the sun. You will burn more as you make an effort to make it happen. So let’s face reality and adapt yourself in…

-

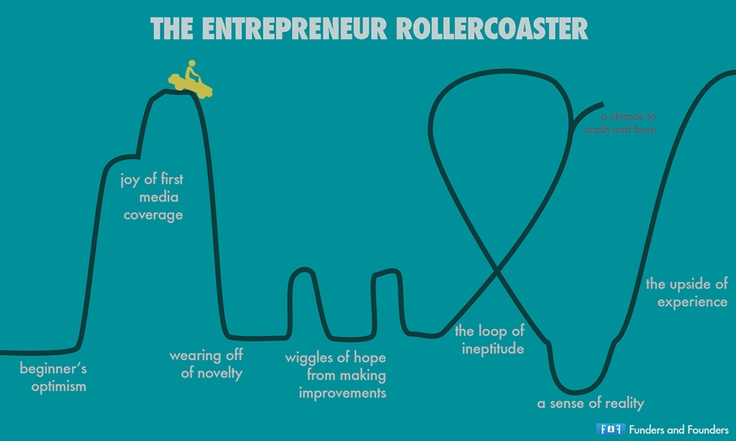

Bir startup ne zaman başarısız olur?

a. Ortaklar arası anlaşmazlıklar b. Şirketi devam ettirecek para kalmaması c. Ürün pazar uyumu yakalanamadığında d. Ortakların enerjisi tükendiğinde Doğru cevap d. Diğer tüm şıklar, ortakların enerjisi var ise çözülebilir. Anlaşmazlıklar karşılklı tartışarak çözülebilir. Şirketi devam ettirecek bütçe kalmadıysa ortaklar tüm enerjilerini para bulmaya ayırarak fikre küçük yatırımcıları ikna edebilir. Ürün pazar uyumu yoksa ortaklar…

-

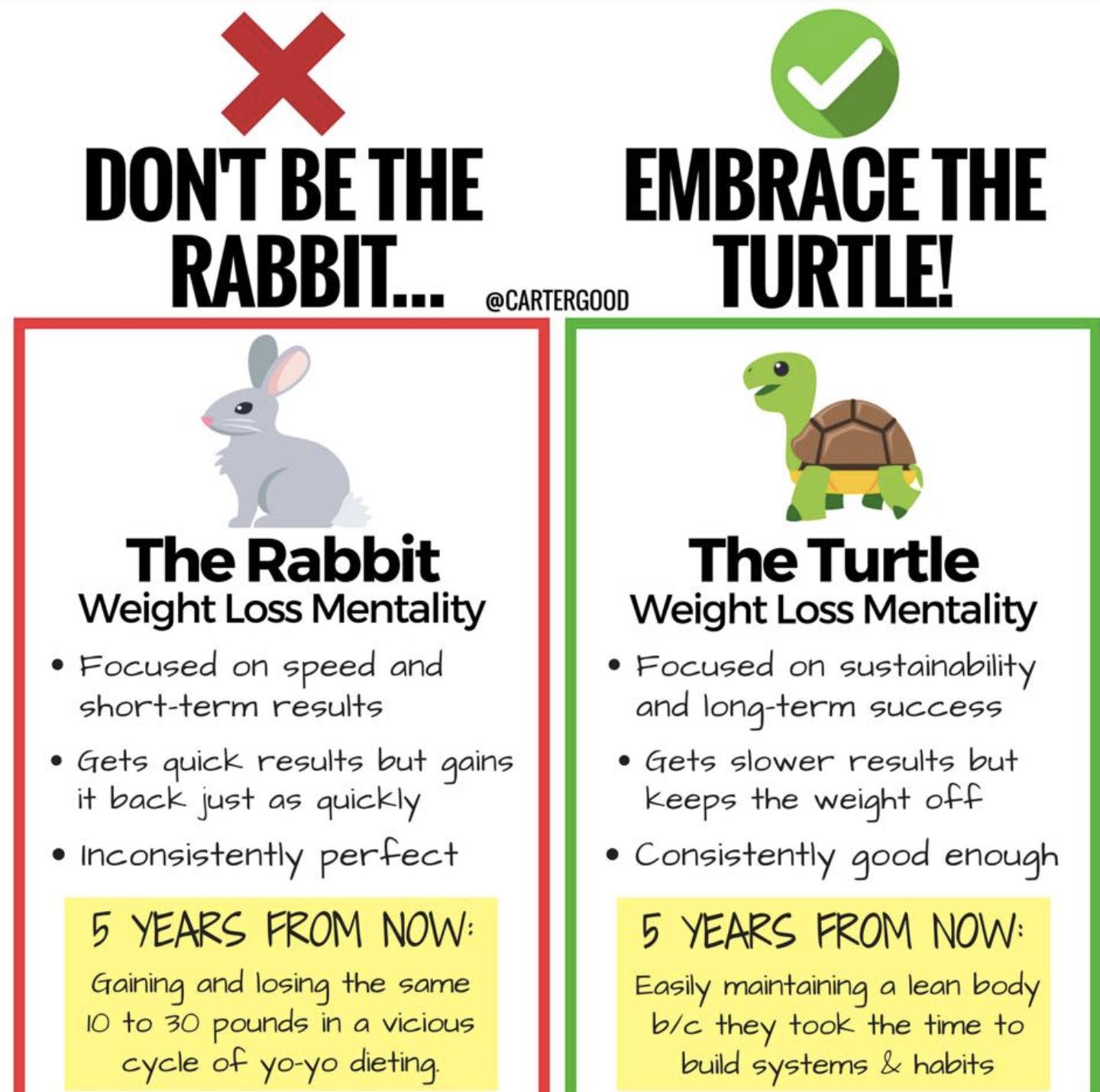

Psikoloji Savaşları – Turtle Mindset

Sizi etkileyecek, yarı yolda vazgeçmenize yol açabilecek yüzlerce sebep var. Ancak başarmak için de binlerce sebep bulmak mümkün. Dolayısıyla mesaj net. Beyninizin bizi manipüle etmesine izin vermeden hedefe doğru bazen çok hızlı, bazense kaplumbağa adımlarıyla ilerleyeceksiniz. Turtle mindset’e göre ufak da olsa kararlı adımlar atmak sizi diğerlerinden daha hızlı hedefe yaklaştırır. Bu yaklaşım, Weight loss…

Get Engaged with a Free 1-1 Exploratory Conversation